Positive vs. Negative Sum Games

If I have an extra cup of coffee, and you have an extra two dollars, we both come out ahead when I agree to sell it to you.

This might sound painfully obvious, but it's an example of the idea I most wish I had been taught in school: the concept of positive-sum and zero-sum games.

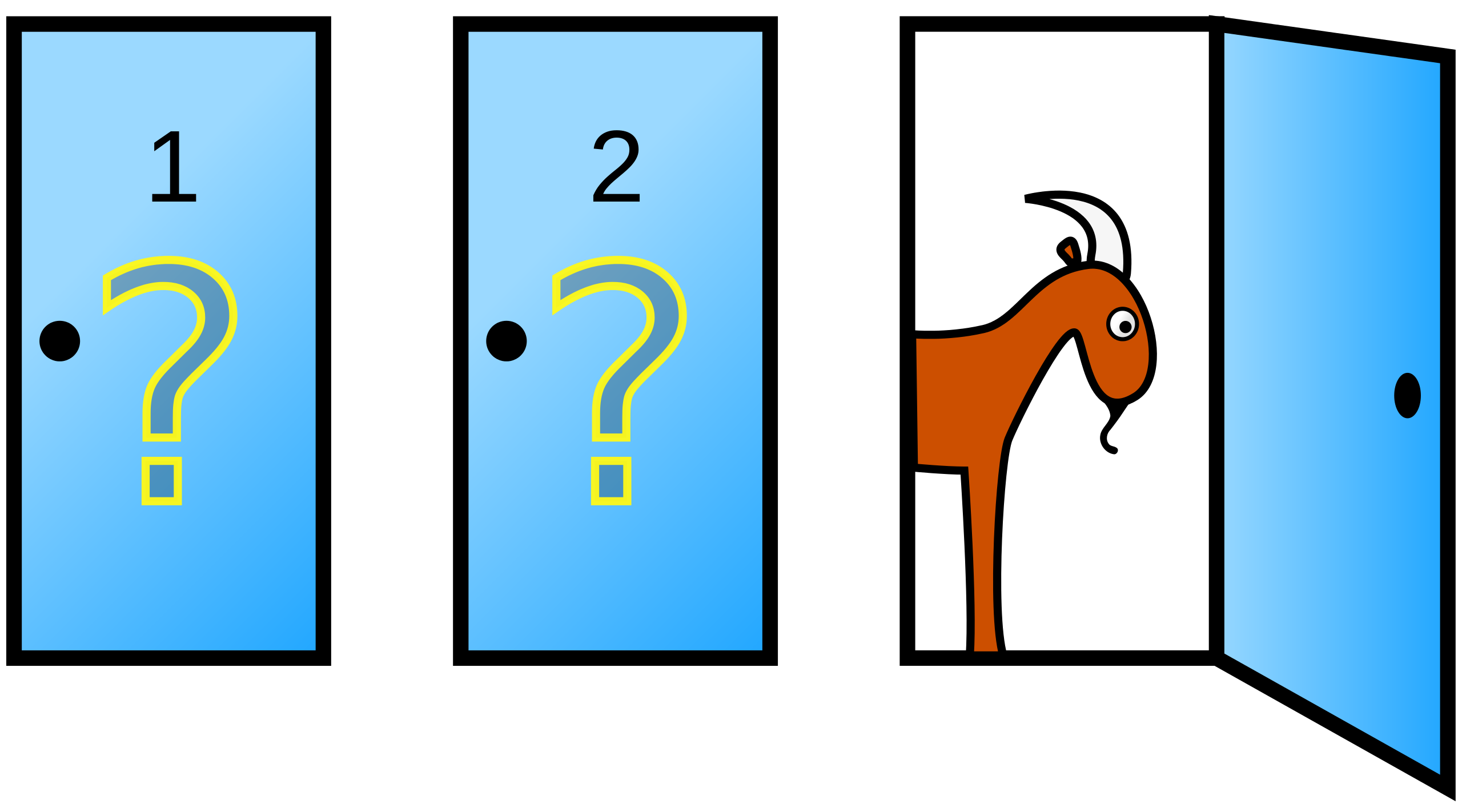

A zero-sum game is one in which the total gained by the winner is equal to the total lost by the loser, so everyone's gains and losses add up to zero. Sports are the most frequently cited example of zero-sum games, since only one team can win. Poker is also zero-sum: every dollar one player wins is a dollar another player loses. In a tournament, you keep playing until you either lose all your chips or win everyone else's.

But this concept isn't limited to games in the strict sense of the word. Electoral politics is also typically a zero-sum game, since only one candidate can win a given race.

Zero-sum games are ferocious, because any gain you make comes directly at someone else's expense, and vice versa. In the long run, if you don't come out ahead, you lose.

Positive-sum games, on the other hand, are different: the total gains outweigh the total losses. Two parties playing a positive-sum game can both gain something from the interaction, regardless of who "wins."

International trade, for example, is positive-sum. If one country has surplus oil and another has surplus corn, both countries win by trading, provided each wants what the other has to offer.

A lyricist and a composer who agree to collaborate on a new musical are also playing a positive-sum game. Perhaps neither could write the next Tony Award-winning musical alone, but by teaming up, they dramatically increase the potential upside of their efforts.

All else being equal, if you have the choice between playing a zero-sum (or negative-sum) game and a positive-sum one, you should probably choose the latter.
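For readers who like to see the arithmetic, the distinction comes down to adding up every player's net gain: a positive total means positive-sum, zero means zero-sum, and a negative total means negative-sum. The minimal Python sketch below illustrates this. The function name `classify_game` and every dollar figure are hypothetical, chosen only for illustration. The coffee values assume I'd value my spare cup at $1 and you'd value it at $3. The "rake" is the cut a card room keeps from each pot, which is what turns an otherwise zero-sum poker hand into a negative-sum one.

```python
from typing import Sequence


def classify_game(net_gains: Sequence[int]) -> str:
    """Classify an interaction by the sum of every player's net gain.

    net_gains holds each player's gain (positive) or loss (negative)
    in a common unit, here whole dollars. Integers keep the zero
    comparison exact.
    """
    total = sum(net_gains)
    if total > 0:
        return "positive-sum"
    if total < 0:
        return "negative-sum"
    return "zero-sum"


# Hypothetical coffee trade: the seller values the spare cup at $1 and the
# buyer values it at $3, so a $2 sale leaves each of them $1 better off.
coffee_trade = [2 - 1, 3 - 2]

# Hypothetical poker hand: one player wins exactly what the other two lose.
poker_hand = [50, -30, -20]

# The same hand in a card room that keeps a hypothetical $5 rake:
# the winner nets only $45, so the table as a whole loses $5.
raked_hand = [45, -30, -20]

for name, gains in [("coffee trade", coffee_trade),
                    ("poker hand", poker_hand),
                    ("raked poker hand", raked_hand)]:
    print(f"{name}: total {sum(gains)} -> {classify_game(gains)}")
```

Running it prints a total of 2 (positive-sum) for the coffee trade, 0 (zero-sum) for the plain poker hand, and -5 (negative-sum) for the raked hand.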

In a capitalist society, it's easy to fall into the trap of viewing the world as a zero-sum competition: Either you have this dollar, or I do.

But this mindset is misleading. Many areas of our society that seem zero-sum on the surface are actually positive-sum.

Have you ever noticed the way in which businesses in competition with one another tend to cluster together? That's because they're often more financially successful in proximity to each other. You might see a Starbucks around the corner from an indie cafe (or even across the street from another Starbucks), or multiple clothing retailers in the same mall.

There are several reasons for this, but a key one is that by concentrating demand for a particular good or service in a single part of town, these businesses collectively attract more customers than they would if they were spread out.

When I worked at a circus school, it was tempting to see ourselves as playing a zero-sum game with the other school in town. But in reality, relatively few people actively seek out circus classes, and most of our new students came from word-of-mouth. This means that the more circus schools there are, the more people will hear that it's a fun way to stay active and learn unusual skills.

Did we lose out on some students to our "competitor"? Absolutely. But ultimately, we were working together to grow the pie, getting more people to realize that they didn't have to grow up in a circus to learn trapeze.

Games don't always fall neatly into one category or the other, and you can often play them in ways that push them closer to the positive-sum side.

For example, a couple negotiating a divorce can decide to make some compromises early in the process to minimize how much they lose to lawyer fees. In doing so, they avoid a drawn-out fight in which legal fees shrink the pot for both of them, and each walks away with more money on average.

When you start to recognize how much of life can be played in a positive-sum way, you may discover that the range of possibilities open to you expands.